2021 March 10,

The third part of CS 311 deals with a new algorithm: ray tracing. We implement ray tracing atop the same primitive 000pixel.o foundation that we used in the first part of the course.

Because Exam B takes place on Day 22 of the course, this homework is due on Day 23, not Day 22. We ray trace spheres of uniform color.

600isometry.c: Start with a copy of 140isometry.c. Please correct an oversight of mine: In all methods except isoSetRotation and isoSetTranslation, the isometry parameter should be qualified const.

600camera.c: Start with a copy of 150camera.c. Implement the following function.

    /* Assumes viewport is <0, 0, width, height>. Returns the 4x4 matrix that
    transforms screen coordinates to world coordinates. Often the W-component of
    the resulting world coordinates is not 1, so perform homogeneous division after
    using this matrix. As long as the screen coordinates are truly in the viewing
    volume, the division is safe. */
    void camWorldFromScreenHomogeneous(
            const camCamera *cam, double width, double height, double homog[4][4])
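To illustrate the homogeneous division that the comment above asks for, here is a minimal sketch of how the returned matrix might be applied to one screen point. The helper name screenToWorld is hypothetical (it is not part of the course code), and the sketch assumes that the screen point is given as a homogeneous 4-vector (x, y, z, 1).

```c
/* Hypothetical helper, not part of the course code. Multiplies the 4x4 matrix
m by the homogeneous screen point s, then performs homogeneous division,
storing the resulting 3D point in world. Returns 0 on success, or 1 if the
W-component is zero, in which case world is left untouched. */
int screenToWorld(
        const double m[4][4], const double s[4], double world[3]) {
    double h[4];
    for (int i = 0; i < 4; i += 1) {
        h[i] = 0.0;
        for (int j = 0; j < 4; j += 1)
            h[i] += m[i][j] * s[j];
    }
    if (h[3] == 0.0)
        return 1;
    /* Homogeneous division: scale so that the W-component becomes 1. */
    for (int i = 0; i < 3; i += 1)
        world[i] = h[i] / h[3];
    return 0;
}
```

In a renderer, s would typically be built from the pixel coordinates and a chosen depth, and m would be the matrix that camWorldFromScreenHomogeneous writes into homog.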

600ray.c: Study. This file is the beginning of the core of our ray tracer. We add to it in the days ahead.

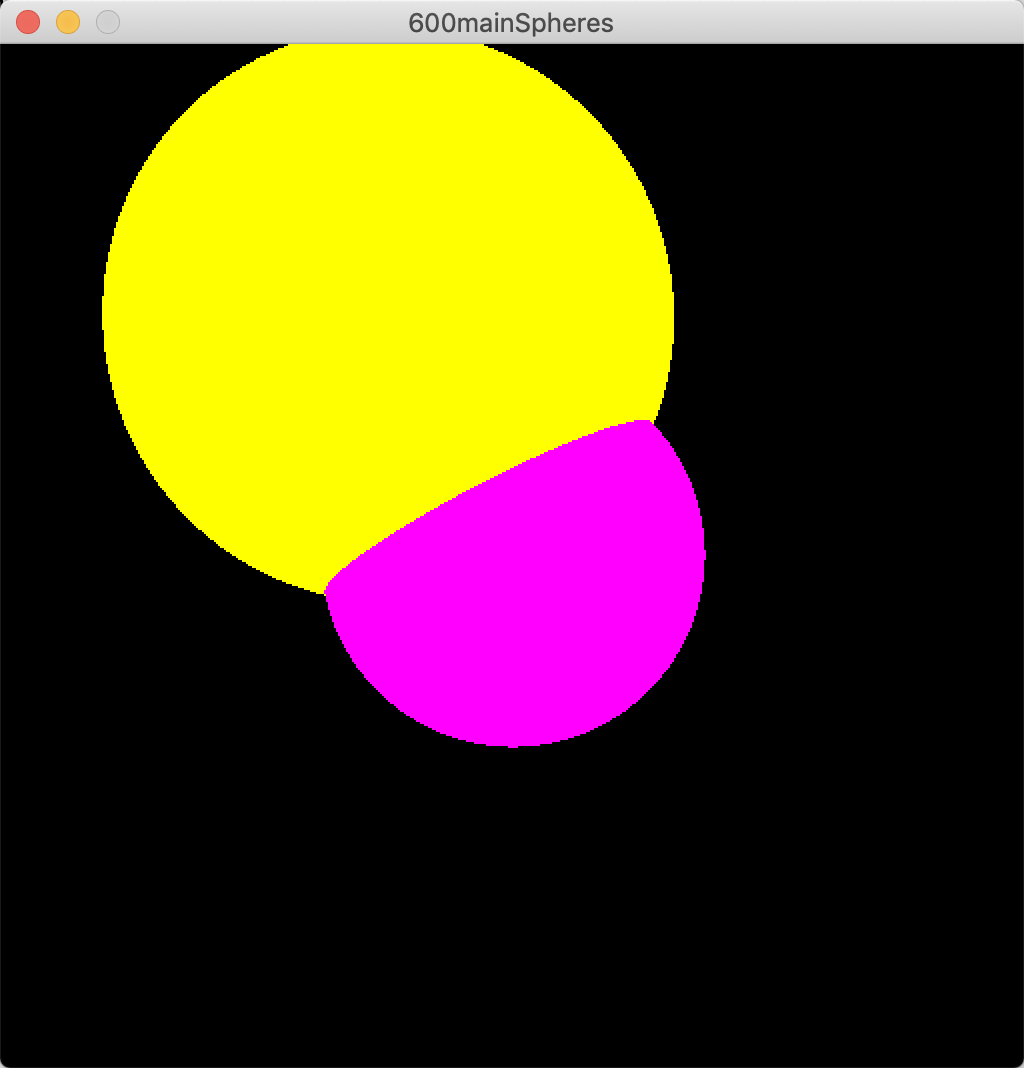

600mainSpheres.c: Implement the intersectSphere function and finish implementing the render function. The latter should use the former to render the two spheres, whose isometries, radii, and colors are already configured for you. Test. The keyboard handlers should help you inspect your work. Here's a screenshot of mine:

Clean up and hand in 600mainSpheres.c and its dependencies.

Today we re-incorporate texture mapping and Phong lighting.

610vector.c: Start with a copy of 120vector.c. Add the following function, which I have written for you.

    /* Partial inverse to vec3Spherical. Always returns 0 <= rho, 0 <= phi <= pi,
    and 0 <= theta <= 2 pi. In cartography this function is called the
    equirectangular projection, I think. */
    void vec3Rectangular(
            const double v[3], double *rho, double *phi, double *theta) {
        *rho = sqrt(vecDot(3, v, v));
        if (*rho == 0.0) {
            /* The point v is near the origin. */
            *phi = 0.0;
            *theta = 0.0;
        } else {
            *phi = acos(v[2] / *rho);
            double rhoSinPhi = *rho * sin(*phi);
            if (rhoSinPhi == 0.0) {
                /* The point v is near the z-axis. */
                if (v[2] >= 0.0) {
                    *rho = v[2];
                    *phi = 0.0;
                    *theta = 0.0;
                } else {
                    *rho = -v[2];
                    *phi = M_PI;
                    *theta = 0.0;
                }
            } else {
                /* This is the typical case. */
                *theta = atan2(v[1], v[0]);
                if (*theta < 0.0)
                    *theta += 2.0 * M_PI;
            }
        }
    }

610mainTexturing.c: Start with a copy of 600mainSpheres.c. Include 610vector.c and 040texture.c. Initialize and destroy a texture in the appropriate places. Implement the following function using isoUntransformPoint, vec3Rectangular, and texSample. The texture coordinates should probably be s = φ / π and t = θ / (2 π). Then use the following function to decide the pixel color in render. I recommend that you modulate the uniform color with the sampled color. See my screenshot below.

    /* Given a sphere described by an isometry, radius, color, and texture. Given
    the query and response that prompted this request for color. Outputs the RGB
    color. */
    void colorSphere(
            const isoIsometry *iso, double radius, const double color[3],
            const texTexture *tex, rayQuery query, rayResponse resp,
            double rgb[3])

Let's get Phong lighting working again. For simplicity, let's continue to use a directional (rather than positional) light.

620ray.c: In a copy of 600ray.c, implement the following functions. Compared to our earlier treatment of lighting, we make one minor tweak to the specular reflection calculation. Instead of reflecting d_light across d_normal and comparing it to d_camera, we reflect d_camera across d_normal and compare it to d_light. (Study question: Why are they equivalent, and why do we choose this version now?)

    /* Calculates the sum of the diffuse and specular parts of the Phong reflection
    model, storing that sum in the rgb argument. Directions are unit vectors. They
    can be either local or global, as long as they're consistent. dRefl is the
    reflected camera direction. */
    void rayDiffuseAndSpecular(
            const double dNormal[3], const double dLight[3], const double dRefl[3],
            const double cDiff[3], const double cSpec[3], double shininess,
            const double cLight[3], double rgb[3])

    /* Given a (not necessarily unit) vector v and a unit vector n, reflects v
    across n, storing the result in refl. The output can safely alias the input. */
    void rayReflect(int dim, const double v[], const double n[], double refl[])

620mainLighting.c: Start with a copy of 610mainTexturing.c. Edit your colorSphere function to compute d_normal and the reflected d_camera, call rayDiffuseAndSpecular, and add some ambient light. Marshal your data sources as you like. For example, in your initialization code for lights and camera, you could have globals for d_light and c_light. You could use the texture color for c_diff and the uniform color for c_spec. That's what I did in the first screenshot below. In the second screenshot, I switched the roles of c_diff and c_spec. Notice the gash across the specular highlight.

Clean up and hand in 610mainTexturing.c, 620mainLighting.c, and their dependencies.

Today's assignment is not very long. We implement two lighting effects — shadows and mirrors — which are complicated in rasterization but pretty simple in ray tracing. Along the way, we reorganize our code a bit, so that we can make recursive calls to the ray-tracing algorithm.

I break this task into two baby steps. Of course, you are free to combine them, if you think that they're too small.

630mainShadows.c: In a copy of 620mainLighting.c, implement the following function. For now, it simply accesses our global sphere variables; later we abstract the spheres better. Because there are only two spheres in our scene, the index will always be 0 or 1. In render, use this function to determine which sphere was hit, before asking that sphere for its color. Test. You should get the same imagery as you did earlier, because we haven't added anything new yet.

    /* Intersects the ray with every object in the scene. If no object is hit in
    [tStart, tEnd], then the response signals rayNONE. If any object is hit, then
    the response is the response from the nearest object, and index is the index
    of that object in the scene's list of bodies (0, 1, 2, ...). */
    rayResponse intersectScene(rayQuery query, int *index)
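The "nearest object wins" rule in that comment, and its use for shadows, can be sketched as follows. The type sketchResponse and the functions sketchNearest and sketchIsLit are hypothetical. The sketch assumes that each body has already been intersected with the ray, so it shows only the selection logic and not the intersection itself.

```c
/* Hypothetical stand-in for the course's rayResponse: whether a body was hit
and, if so, at what ray parameter t. */
typedef struct {
    int hit;
    double t;
} sketchResponse;

/* Given the responses from intersecting one ray with each of num bodies,
returns the index of the nearest body that was hit, or -1 if none was hit. */
int sketchNearest(int num, const sketchResponse resps[]) {
    int index = -1;
    for (int i = 0; i < num; i += 1)
        if (resps[i].hit && (index == -1 || resps[i].t < resps[index].t))
            index = i;
    return index;
}

/* Shadow test for a directional light. shadowResps holds the responses for a
ray cast from the surface point toward the light. The point is lit exactly
when that ray hits nothing. */
int sketchIsLit(int num, const sketchResponse shadowResps[]) {
    return sketchNearest(num, shadowResps) == -1;
}
```

When casting the shadow ray, a common practice is to start it at a small positive t rather than at 0, so that rounding error does not make the surface shadow itself. Whether the course code does this is an assumption here.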

630mainShadows.c: In colorSphere, use intersectScene to determine whether diffuse and specular lighting should be included. See below for a screenshot of mine.

Now we make one of the spheres a mirror. (Do not make both spheres mirrors. Which two bad things would happen?)

640mainMirrors.c: In a copy of 630mainShadows.c, implement the following function using intersectScene and colorSphere. In render, use this function to determine the color of each pixel. To clarify, render should be quite simple now: For each pixel, it should construct a query, call colorScene, and then call pixSetRGB. Test. You should get the same imagery as you did earlier, because we haven't added anything new yet.

    /* Given a ray query, tests the entire scene, setting rgb to be the color of
    whatever object that query hits (or the background). */
    void colorScene(rayQuery query, double rgb[3])

640mainMirrors.c: Make a copy of colorSphere named colorSphereMirror (or whatever you want). Have colorScene call colorSphere for one sphere and colorSphereMirror for the other. Test. You should get the same imagery.

640mainMirrors.c: Edit colorSphereMirror to implement a mirror effect, by calling colorScene with a query in the reflected camera direction. We are now in a situation of mutual recursion: colorSphereMirror calls colorScene and vice-versa. The C compiler complains. To fix the complaint, add the following line of code above colorSphereMirror. Then your mirroring should work. See below for my screenshot. (I moved the spheres, so that they no longer intersect, to make the effect clearer.)

void colorScene(rayQuery query, double rgb[3]);

Clean up and hand in 630mainShadows.c and 640mainMirrors.c.

Today we abstract two key features: how the algorithm depends on the body's geometry, and how the algorithm depends on the body's material. It might look like a lot of work, but most of the steps are very small, as in "move code from here to there and tweak it". (Also I have postponed the third chunk of work to next time.)

First we move the calculation of normal vector and texture coordinates from the sphere coloration functions into the sphere intersection function. This small tweak has big implications for our software design.

650ray.c: In a copy of 620ray.c, add the following members to the bottom of the rayResponse structure.

    /* If the intersection code is not rayNONE, then contains the unit
    outward-pointing normal vector at the intersection point, in world
    coordinates. */
    double normal[3];
    /* If the intersection code is not rayNONE, then contains the texture
    coordinates at the intersection point. */
    double texCoords[2];

650mainAbstracted.c: Edit your sphere-intersection function so that its rayResponse contains the correct normal vector and texture coordinates. (You don't really need to write new code. You just have to copy code from one of your sphere coloration functions and tweak it.) Then edit your sphere coloration functions to use the normal vector and texture coordinates from rayResponse rather than recomputing them. Test. Your imagery should be exactly as it was before this change.

650mainAbstracted.c: If you did the preceding work correctly, then your sphere coloration functions no longer have anything to do with spheres. Rather, they are about the materials that the spheres are made of. Rename them to something like colorPhong and colorMirror. Test.

Continuing the preceding work, we now abstract the material data from the calculations that use those data.

660ray.c: In a copy of 650ray.c, add the following data type.

    typedef struct rayMaterial rayMaterial;
    struct rayMaterial {
        /* In every case, the ray tracer should use cDiff to add some ambient light
        to the fragment color. If hasDiffAndSpec != 0, then the ray tracer should
        also calculate the rest of the Phong lighting model and add the result into
        the fragment color. If hasDiffAndSpec == 0, then cSpec is effectively black,
        but cDiff still matters for the ambient lighting. */
        int hasDiffAndSpec;
        double cDiff[3], cSpec[3], shininess;
        /* If hasMirror != 0, then the ray tracer should send out a mirror ray, get
        its color, modulate that color by cMirror, and add the result into the
        fragment color. If hasMirror == 0, then cMirror is effectively black. */
        int hasMirror;
        double cMirror[3];
    };

660mainAbstracted.c: Implement the following function, by combining code from your two coloration functions. (Its name suggests that it should be in ray.c. We move it there later.) Test. You should get the same imagery as you did earlier, because you are not yet using this function. You're just making sure it compiles.

    /* Given a query, the resulting response, and the resulting material, computes
    the fragment's final RGB color. As of the 660 version, this function is allowed
    to access the scene (bodies and lights) through global variables. */
    void rayColor(
            rayQuery query, rayResponse resp, rayMaterial mat, double rgb[3])
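The way rayColor is meant to combine effects, as described in the rayMaterial comments, can be sketched without the rest of the ray tracer. Everything here is hypothetical: sketchMaterial copies the fields of rayMaterial so that the example stands alone, and sketchCombine assumes that the Phong contribution and the mirror ray's color have already been computed and are passed in as arguments.

```c
/* Hypothetical copy of the rayMaterial fields, so the sketch stands alone. */
typedef struct {
    int hasDiffAndSpec;
    double cDiff[3], cSpec[3], shininess;
    int hasMirror;
    double cMirror[3];
} sketchMaterial;

/* Combines the lighting contributions into rgb. cAmbient is the ambient light
color. phong is the precomputed diffuse-plus-specular contribution. mirrored
is the precomputed color seen along the mirror ray. */
void sketchCombine(
        const sketchMaterial *mat, const double cAmbient[3],
        const double phong[3], const double mirrored[3], double rgb[3]) {
    for (int i = 0; i < 3; i += 1) {
        /* Ambient light always uses cDiff, whatever the flags say. */
        rgb[i] = mat->cDiff[i] * cAmbient[i];
        if (mat->hasDiffAndSpec)
            rgb[i] += phong[i];
        if (mat->hasMirror)
            rgb[i] += mat->cMirror[i] * mirrored[i];
    }
}
```

In a real rayColor the phong and mirrored values would be computed only when the corresponding flag is set, which avoids casting unnecessary rays.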

660mainAbstracted.c: Edit your two coloration functions so that each one constructs a rayMaterial and then calls rayColor to do the actual coloration work. The material properties should be rigged so that you get exactly the same imagery as you did earlier. Test.

670mainAbstracted.c: In a copy of 660mainAbstracted.c, edit your mirror coloration function so that its material is not a pure mirror but also has some ambient lighting (and some diffuse and specular, if you like). The point of this exercise is to test whether rayColor combines effects correctly. Here's a screenshot of mine:

670mainAbstracted.c: Move the call to rayColor out of the coloration functions, as follows. First, edit your coloration functions so that, instead of calling rayColor on the material, they simply return the material. Elsewhere — wherever you call those coloration functions — follow them with a call to rayColor. Test. You should get the same imagery.

Clean up and hand in 650mainAbstracted.c, 660mainAbstracted.c, 670mainAbstracted.c, and their dependencies.

Today we finish the abstraction tasks that we started in the last assignment. Then we implement planes.

Our first task is: The objects in our scene should manifest as an array of bodies. Any function that needs these bodies should be given them explicitly as arguments, rather than accessing them implicitly as global variables.

680body.c: Skim. This file defines a body: a geometric shape, of a prescribed material, positioned in the scene. Cylinders are already handled for you. Implement the sphere intersection function by copying and tweaking a function that you already have.

680mainAbstracted.c: Start with a copy of 670mainAbstracted.c. You should have two material shaders called something like colorPhong and colorMirror. Make a copy of each one. Edit the copies so that they have the same interface (inputs and outputs) as the getMaterial member of bodyBody. Edit their implementations accordingly. Then, in the appropriate places, declare, initialize, and destroy an array of two bodies: one a cylinder with one material shader, and the other a sphere with the other material shader. Test. You should get the same imagery, because you are not yet using these new bodies.

680mainAbstracted.c: Unfortunately, we have to make several changes simultaneously now. You have a function that intersects a ray with the scene (intersectScene?), a function that converts a material into a final fragment color (rayColor), and a function that determines the color of any ray cast into the scene (colorScene?). In each of these three functions, insert two new arguments at the start of their inputs: int bodyNum, const bodyBody *bodies[]. Change the implementations to use these inputs instead of accessing anything about spheres or bodies globally. You'll also have to tweak other code that calls these three functions, such as your render function. Here's a screenshot of mine:

680mainAbstracted.c: At this point you might have a lot of orphaned code that isn't being used anymore. Take this opportunity to remove it.

Our next task is: We need to be able to cut off recursive calls to the ray tracer, when they get too deep.

690mainRecursive.c: You have a function that converts a material into a final fragment color (rayColor) and a function that determines the color of any ray cast into the scene (colorScene?). In each of these two functions, insert a new argument at the start of their inputs: int recNum. When the latter function calls the former function, it just passes along its recNum value. But when the former function calls the latter function, it passes a decremented recNum value. And if that decremented value is negative, then it doesn't make the call at all. In your render function, start the recursion with a recNum of 1 (or 2 or 3). Test. You should get the same imagery as you did earlier. However, if you start the recursion with a recNum of 0, then the mirror effect should automatically turn off.
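The recNum bookkeeping can be sketched with a toy scene in which every ray hits a mirror, reduced to a single color channel. The functions sketchColor and sketchScene are hypothetical stand-ins for rayColor and colorScene, and the ambient and mirror parameters are illustrative only. The forward declaration is needed because the two functions call each other.

```c
/* Forward declaration, so that sketchColor can call sketchScene. */
double sketchScene(int recNum, double ambient, double mirror);

/* Stand-in for rayColor: turns a material into a color. It always adds the
ambient term. It adds the mirror term only if the decremented recNum is
nonnegative, and it passes that decremented value along. */
double sketchColor(int recNum, double ambient, double mirror) {
    double result = ambient;
    if (recNum - 1 >= 0)
        result += mirror * sketchScene(recNum - 1, ambient, mirror);
    return result;
}

/* Stand-in for colorScene: in this toy scene every ray hits a mirror, so it
simply passes recNum along unchanged. */
double sketchScene(int recNum, double ambient, double mirror) {
    return sketchColor(recNum, ambient, mirror);
}
```

With recNum equal to 0 the result is just the ambient term, which matches the statement that the mirror effect turns off. Without the cutoff, this toy scene would recurse forever.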

Now that we have recursion under control, we can safely add light transmission. But let's delay that until the next assignment. Let's instead implement another geometric type: planes. It doesn't take long. Recall from class that, in its local coordinates, a plane is the X-Y-plane with unit outward-pointing normal going in the positive Z-direction.

700body.c: Start with a copy of 680body.c. Implement planes by writing functions bodyIntersectPlane and bodyInitializePlane. Mimic the code for spheres and cylinders, except for the radius part. (Unlike a sphere or cylinder, a plane does not have a radius. In fact, it doesn't need to use any of its auxiliaries. The auxiliaries are just for the user.)
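Because a plane in its local coordinates is the X-Y-plane, the intersection math is short. Here is a minimal sketch, assuming that the ray's origin and direction have already been transformed into the plane's local coordinates. The function planeHitLocal is hypothetical and does not follow the bodyIntersectPlane interface, which is defined in 680body.c and not shown here.

```c
/* Hypothetical sketch. Intersects the ray x(t) = e + t d, given in the
plane's local coordinates, with the plane z = 0. If the ray hits the plane at
some t with tStart <= t <= tEnd, then stores that t in *t and returns 1.
Otherwise returns 0. */
int planeHitLocal(
        const double eLocal[3], const double dLocal[3], double tStart,
        double tEnd, double *t) {
    /* A ray parallel to the plane never crosses it. */
    if (dLocal[2] == 0.0)
        return 0;
    /* Solve eLocal[2] + t dLocal[2] = 0 for t. */
    double tHit = -eLocal[2] / dLocal[2];
    if (tHit < tStart || tHit > tEnd)
        return 0;
    *t = tHit;
    return 1;
}
```

The local normal at every hit point is (0, 0, 1). It still has to be rotated into world coordinates before it is stored in the response.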

700mainPlanes.c: Start with a copy of 690mainRecursive.c. Add a plane body to the sphere and cylinder bodies that you already have. Give it whatever material you want (within reason). Here's a screenshot of mine:

Clean up and hand in 680mainAbstracted.c, 690mainRecursive.c, 700mainPlanes.c, and their dependencies.

Let's finally implement transmission of light through translucent bodies. Optionally you can add another translucency effect. (I'd also like to abstract the lights, implement meshes, and optimize the meshes, but there's no time.)

710ray.c: In a copy of 660ray.c, add members hasTrans, cTrans, and indexRefr to rayMaterial. The idea is that, if the material is transmissive, then a refracted ray is sent out, its color is modulated by the material's transmissive color, and the result is added onto the other light contributions.

710mainTransmission.c: Start with a copy of 700mainPlanes.c. Edit your material shaders to include transmission data in rayMaterial. Make at least one of your materials transmissive. (Arrange your scene so that your transmissive body does not touch or intersect any other bodies.) In rayColor, implement refraction based on Snell's law, assuming that the material outside the bodies is vacuum or air. (Don't forget the correction that I mentioned today: sin θ_inc = d_inc · d_tan / |d_inc|.) I myself wrote a helper function, rayRefract, to play the same role that rayReflect plays in mirroring. In your render function, you might want to start with a recNum of at least 3. (Why?) During testing, it might help to try rayMaterial.indexRefr equalling 1; then there should be no refraction. Here's a screenshot of mine, with the plane mirroring the cylinder and the sphere refracting both of them:

Clean up and hand in 710mainTransmission.c and its dependencies.

This work is optional. Even if you don't write the code, you are expected to understand the algorithm.

720mainTranslucency.c: Implement the translucency algorithm discussed today in class. Namely, whenever the refracted ray is inside the translucent body, the light is modulated by c_trans^λ, where λ is the distance traveled through the body. Whenever the refracted ray is outside the body, the light is not modulated at all. Here's a screenshot of mine, with rayMaterial.indexRefr equalling 1 and c_trans being gray. Notice that the light coming through the middle of the sphere is darker than the light coming through its fringes.

Here are the further reading ideas that we discuss today in class.

I have posted Exam C Preparation elsewhere, in case you're looking for it here.